For cybersecurity pros who fight unseen cloud adversaries every day, the rise of faceless security automation might feel uncomfortably familiar. But is automation yet another shapeless enemy, this time intent on slashing cybersecurity jobs? Or is it something else?

Fortunately, demand for cloud security experts far outstrips supply. The smart players don't fear automation; they embrace it as a better way to catch the bad guys. For them, the mission is to create the ideal analyst/machine partnership. But how, exactly, can automation and machine learning (ML) best contribute to cloud security workflows?

To get the answer, take a look at how an analyst typically responds to a breach. It usually goes something like this:

- Collect data: Gather information on what happened on each entity during the breach.

- Develop insights: Correlate data to establish the sequence of events – also known as the “cyber kill chain.”

- Apply wisdom: Apply deep understanding of the organization’s technology, people and processes to analyze vulnerabilities (which are seldom exclusively technical).

- Take action: Use whatever technical and interpersonal skills are needed to create durable and effective solutions.
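The "develop insights" step is, at its simplest, a matter of merging events from different collectors and putting them in order. The Python sketch below illustrates that idea only. The `Event` fields, the entity names and the events themselves are hypothetical, and a real correlation engine would also follow connections between entities rather than relying on a hand-picked list.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass(frozen=True)
class Event:
    """One observed event; these fields are illustrative, not a product schema."""
    timestamp: datetime
    entity: str   # host, workload or account the event was observed on
    source: str   # collector that reported it, e.g. "firewall" or "auth-log"
    action: str

def build_timeline(events, entities, start, end):
    """Keep events on the entities of interest inside [start, end] and
    order them chronologically to approximate the attack sequence."""
    related = [e for e in events
               if e.entity in entities and start <= e.timestamp <= end]
    return sorted(related, key=lambda e: (e.timestamp, e.source))

# Hypothetical events reported by three different collectors.
events = [
    Event(datetime(2017, 9, 1, 10, 5), "db-01", "process-monitor", "credential dump"),
    Event(datetime(2017, 9, 1, 10, 0), "web-01", "firewall", "inbound exploit attempt"),
    Event(datetime(2017, 9, 1, 10, 2), "web-01", "auth-log", "admin login"),
    Event(datetime(2017, 9, 1, 9, 0), "mail-01", "auth-log", "routine login"),
]
timeline = build_timeline(events, {"web-01", "db-01"},
                          datetime(2017, 9, 1, 9, 30), datetime(2017, 9, 1, 11, 0))
for e in timeline:
    print(e.timestamp.strftime("%H:%M"), e.entity, e.action)
```

The output lists the exploit attempt, the admin login and the credential dump in that order, which is the kind of sequence an analyst would otherwise reconstruct by hand from separate logs.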

As the tasks become less technical and more organizational, automation adds less and less value. But machine learning can dramatically ease the first two tasks: collecting data and developing insights into how breaches take place. Today, these are tedious exercises in log correlation and research. It's grunt work, and this is where machine learning really shines.

There are a growing number of machine learning options available to cloud cybersecurity pros. To find the one that makes the best contribution to a breach response workflow, consider these three questions:

1. What does the machine learning model know?

You don’t need a doctorate in data science to know that an ML model is only as good as its inputs. If you’re predicting real estate prices, your model’s not going to work very well without the property’s square footage.

Insight quality depends on input quality. Inputs must be comprehensive and detailed enough to help the solution understand connections (between apps, processes, and data both internally and externally), relationships (high-level entity groups and functions), and behaviors (cause and effect patterns and sequences).

Today’s cloud security solutions gather inputs in one of three ways:

- Scrape log files: Cloud entities generate millions of log events that can be used as ML inputs. Unfortunately, log formats vary wildly between vendors, and the sheer volume of collected logs creates complexity, which is exactly where ML-powered analysis can help.

- Monitor the network: Placing listeners in a cloud network (e.g., on a firewall) can give the ML model better data, but network monitoring has limitations. East-west (server-to-server) traffic, intra-VM communication and geographically fluid entities are often out of reach.

- Instrument workloads: Extracting ML inputs directly from cloud workloads maximizes reach and optimizes inputs for the model. The associated administrative overhead depends on the vendor’s implementation tools.
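Whichever way inputs are gathered, they usually have to be mapped onto one common schema before a model can use them. The Python sketch below shows that normalization step for two vendor log formats. Both formats, the field names and the per-entity action counts used as features are assumptions made up for illustration; they do not describe any real product.

```python
import json
from collections import Counter

# Two hypothetical vendor formats for the same kind of event.
# Real products use their own, often very different, formats.
def parse_vendor_a(line: str) -> dict:
    """Vendor A (hypothetical): JSON with "ts", "host" and "evt" keys."""
    raw = json.loads(line)
    return {"time": raw["ts"], "entity": raw["host"], "action": raw["evt"]}

def parse_vendor_b(line: str) -> dict:
    """Vendor B (hypothetical): pipe-delimited "time|action|entity"."""
    time, action, entity = line.strip().split("|")
    return {"time": time, "entity": entity, "action": action}

PARSERS = {"vendor_a": parse_vendor_a, "vendor_b": parse_vendor_b}

def normalize(source: str, line: str) -> dict:
    """Map a raw log line from a known source onto one common schema."""
    record = PARSERS[source](line)
    record["source"] = source
    return record

def action_counts(records) -> dict:
    """Turn normalized records into per-entity counts of each action,
    one of many possible ways to derive model features from logs."""
    counts = {}
    for r in records:
        counts.setdefault(r["entity"], Counter())[r["action"]] += 1
    return counts

records = [
    normalize("vendor_a", '{"ts": "2017-09-01T10:00:00Z", "host": "web-01", "evt": "login_failed"}'),
    normalize("vendor_b", "2017-09-01T10:00:05Z|login_failed|web-01"),
    normalize("vendor_b", "2017-09-01T10:00:09Z|login_ok|web-01"),
]
print(action_counts(records))
```

Once both vendors' failed logins land in the same field, the model sees two failures on `web-01` instead of two unrelated events, which is the kind of connection the inputs need to expose.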

2. How well does the machine learning model work in your environment?

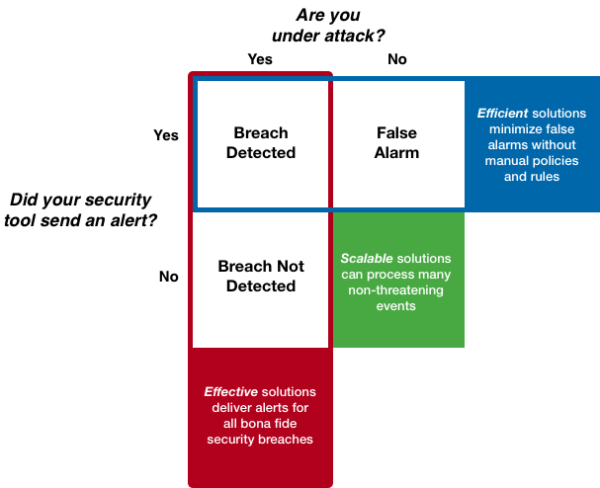

Rather than debating the merits of Bayes Classification vs. SVM algorithms, practitioners need a more pragmatic way to think about how an ML security solution will work in their cloud environment:

- Efficacy: Does the solution spot attacks or is it easy for attackers to bypass?

- Efficiency: Will the security team spend time improving protection or will they waste their days on wild goose chases and policy development?

- Scalability: Cloud offerings can scale very quickly. Can the security solution keep up?

The following chart, which shows how an ML-based security solution might process and respond to events, is one way to think about these critical questions:

From the practitioner’s perspective, this is a simple way to think about how an ML-based solution delivers on its promises.
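The efficacy and efficiency questions can also be put into numbers when a team trials a solution against known attacks, for example during a red-team exercise. The Python sketch below is one hypothetical way to score such a trial. It assumes each detected attack raises exactly one alert and that every alert takes a fixed time to triage; the function and field names, and all figures in the usage example, are invented for illustration. Scalability would need a separate load test and is not covered here.

```python
from dataclasses import dataclass

@dataclass
class Evaluation:
    detection_rate: float   # efficacy: share of known attacks that raised an alert
    alert_precision: float  # efficiency: share of alerts that were real attacks
    wasted_hours: float     # efficiency: analyst time spent triaging false alerts

def evaluate(true_alerts: int, false_alerts: int, missed_attacks: int,
             triage_minutes: float) -> Evaluation:
    """Score a detector over a trial period, assuming each detected
    attack produced exactly one alert."""
    attacks = true_alerts + missed_attacks
    alerts = true_alerts + false_alerts
    # Report 0.0 rather than dividing by zero when there is nothing to measure.
    detection_rate = true_alerts / attacks if attacks else 0.0
    precision = true_alerts / alerts if alerts else 0.0
    wasted_hours = false_alerts * triage_minutes / 60
    return Evaluation(detection_rate, precision, wasted_hours)

# Invented numbers: 18 of 20 simulated attacks caught, 182 false alerts,
# 15 minutes to triage each alert.
result = evaluate(true_alerts=18, false_alerts=182, missed_attacks=2, triage_minutes=15)
print(result)
```

In this made-up trial the detector catches 90% of attacks, but only 9% of its alerts are real and false alerts consume 45.5 analyst hours: strong on efficacy, weak on efficiency.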

3. What tools are available to assess an attack?

Even “perfect” breach detection—no false positives and every attack detected—won’t fix underlying vulnerabilities. To take action and remediate problems, analysts need help investigating and understanding incidents.

This is a make-or-break question. Overly complex solutions make it hard to develop insights, but too little information leads to oversimplified or inaccurate conclusions.

Here’s what to look for:

- Data aggregation to reduce cloud entities into a manageably small number of groups

- Multidimensional visualizations that show an attack from multiple perspectives (e.g., a user view showing which accounts were involved combined with a connectivity view showing which databases were involved)

- Correlation of events across multiple inputs and easy navigation between related events

- Ability to drill up or down, from architecture-level views all the way to individual processes

- Time-based tools to compare/contrast system states
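Two items on this list, data aggregation and time-based comparison, combine naturally. The Python sketch below collapses entity-level connections into group-level edges and then diffs two snapshots to surface what changed. The entity-to-group mapping, the snapshots and the function names are hypothetical; in practice the groups would come from the solution's own entity classification.

```python
def aggregate(connections, group_of):
    """Collapse entity-level (src, dst) connections into group-level edges,
    using a mapping from entity name to a group label such as its role."""
    return {(group_of.get(src, "unknown"), group_of.get(dst, "unknown"))
            for src, dst in connections}

def compare_states(before, after):
    """List group-level connections that appeared or disappeared between snapshots."""
    return {"new": sorted(after - before), "gone": sorted(before - after)}

# Hypothetical entity-to-group mapping and two daily snapshots.
groups = {"web-01": "web", "web-02": "web", "db-01": "database",
          "hr-laptop-7": "workstation"}
monday = aggregate([("web-01", "db-01"), ("web-02", "db-01")], groups)
tuesday = aggregate([("web-01", "db-01"), ("hr-laptop-7", "db-01")], groups)
print(compare_states(monday, tuesday))
# {'new': [('workstation', 'database')], 'gone': []}
```

Aggregating first keeps the comparison small: two web servers talking to the database collapse into one edge, so the single new workstation-to-database connection stands out instead of being buried among entity-level changes.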

Will automation and machine learning change how cloud security professionals do their jobs? Of course they will. The right tools will take the drudgery out of data collection and analysis, and they’ll provide better insights, faster. The analyst/machine partnership is about to get more efficient and effective. And, just possibly, more fun!

- Three Critical Machine Learning Questions for Cybersecurity Pros - September 12, 2017
