Last week I attended the IBM event with Fast Company on its hybrid cloud solutions. IBM is unique in that it often populates events like this with customers who talk about how IBM’s solutions are used rather than the far less interesting performance metrics of new hardware, software, or networking solutions. This use of customers to create content is far more compelling than the usual vendor talking head, and, in terms of credibility, people who use a technology are far more credible than those who sell it. In short, IBM’s use of customers to tell the story has for some time been a best practice.

The event mostly focused on how these companies had cut costs and improved their reliability, security, and operations with IBM’s hybrid cloud solutions. The customers testifying ranged from small to very large companies, which also showcased the amazing breadth of cloud offerings in general and IBM’s unique hybrid/multi-cloud solution in particular.

But one interesting conversation really caught my attention. I am an ex-internal auditor and compliance officer. Ironically, when I had that job, it was working for IBM. One of the recurring audit comments was about how employees and executives constantly misclassified documents. The discussion at IBM’s event covered using AI to fix this specific problem.

Let’s talk about that this week.

The data classification problem and how it is about to get much worse

Virtually every large company has a document and data classification policy. This policy dictates who can see the related document or file without approval and what approvals are needed for certain classified documents. This policy begins with unclassified documents that anyone internally and externally can see and continues to classification levels that, by tier, restrict these documents to fewer and fewer people, with the top classification typically being eyes-only for the CEO and select members of the board, legal or their direct reports.

Documents that are overclassified create operational friction and require approvals that, by policy, are not necessary. On the other hand, under-classified documents expose information to people who have no right to it. People overclassify because they do not understand the policy, or they somehow connect it to status rather than security (I can see it, but you do not qualify). Under-classification is often done to intentionally breach policy without getting caught, and, given audit is a sampling function, people who do this often get away with it.
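
The two failure modes above can be made concrete with a small sketch. The four tier names, the `can_view` rule, and the `audit_label` helper are illustrative assumptions, not taken from IBM’s policy or any real product; actual policies define their own tiers and approval paths.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical tiers, ordered from least to most restricted."""
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


def can_view(clearance: Level, label: Level) -> bool:
    """A reader may open a document without extra approval only if
    their clearance is at least as high as the document's label."""
    return clearance >= label


def audit_label(assigned: Level, required: Level) -> str:
    """Compare the label a person assigned with the label policy requires."""
    if assigned > required:
        return "overclassified"   # needless approvals, operational friction
    if assigned < required:
        return "underclassified"  # exposure to people with no right to it
    return "correct"


if __name__ == "__main__":
    # An internal-only reader hits an approval wall on a confidential file.
    print(can_view(Level.INTERNAL, Level.CONFIDENTIAL))
    # A routine memo stamped at the top tier creates friction.
    print(audit_label(Level.RESTRICTED, Level.INTERNAL))
    # A confidential file stamped internal is a potential breach.
    print(audit_label(Level.INTERNAL, Level.CONFIDENTIAL))
```

The hard part in practice is the `required` argument: a human auditor only establishes it for a sample of documents, which is why under-classification so often goes unnoticed.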

What is needed is an objective way to automatically classify data.

Why data classification will get worse

This week at NVIDIA’s GTC conference, I spoke to the CEO, Jensen Huang, who told me of a coming future when we will have digital twins of ourselves that can answer questions and even have conversations in our absence. Some of the resulting avatars may not be a digital twin at all, but just a virtual customer care employee or fill some other information-answering role.

If the data that these avatars have access to is not properly classified, it will automatically move with the employee to other companies, be accidentally shared at scale if the right question is asked, and become a far greater security risk.

So, getting our hands around this now is critical.

The IBM AI solution

Security policy with regard to classifications is relatively simple and, as such, is an ideal place to use an AI. Once properly trained, an AI should be able to determine from the content whether a document or file needs to be classified and what the optimum level of classification is, balancing the need to eliminate operational friction against the need to assure required confidentiality.

Not only should an AI be far more consistent with corporate policy, but it should also help refine that policy over time to better meet both the business and security needs of the firm. If there is an audit, being able to show that you consistently use the approved AI in classification should prevent you from getting an adverse audit report.

Wrapping up

Classifying documents properly has been a problem going back to when we first started doing this. People doing the classification are problematic because they may be motivated to either overclassify or underclassify, resulting in inefficiencies and avoidable data breaches. Using an AI to do this classification instead should, if the AI is properly trained, produce a far more consistent result and a far better balance of the operational and security needs of the firm.

In short, even though we are not yet at general AI performance, for something like document classification, a properly trained AI should now be able to both outperform a human and protect that human from making a career-ending mistake.

- CES 2026: The Year AI Got Real, Lenovo Owned the Vision, and Memory Prices Broke the Bank - January 13, 2026

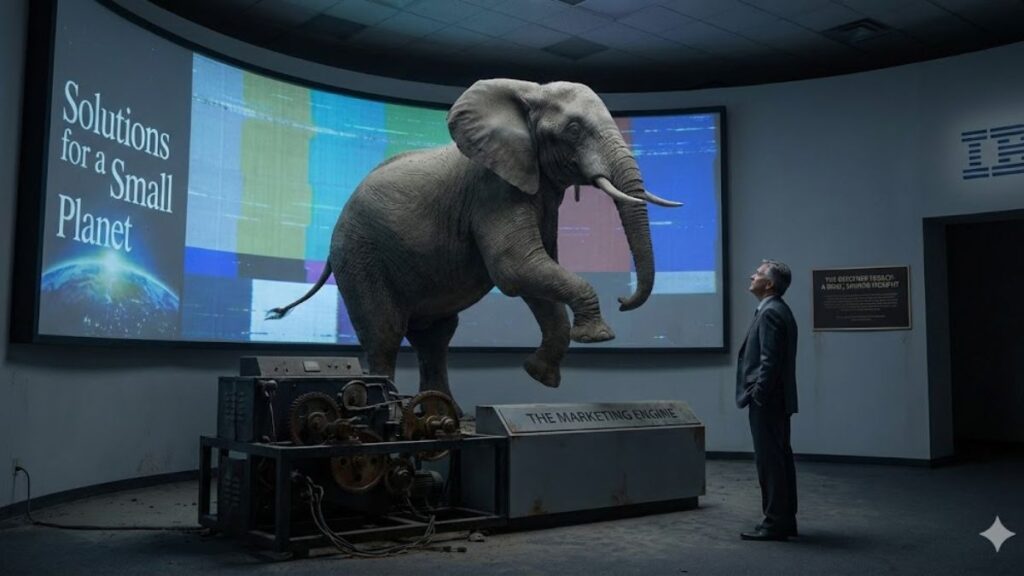

- The Gerstner Legacy: How Marketing Saved IBM and Why Tech Keeps Forgetting It - January 2, 2026

- The Silicon Manhattan Project: China’s Atomic Gamble for AI Supremacy - December 28, 2025

Comments are closed.