To say that drivers aren’t excited about autonomous cars would be an understatement. Because Tesla has been putting technology on the road that has proven impressively unsafe, folks seem to be even less trusting of autonomous cars now than they were a year ago. But what if you could give your car a personality? What if you could change the image from a car that “was going to take you for a ride” to one that was going to take you where you wanted to go, more safely and more interactively?

Many of us have anthropomorphized cars for decades, and after all that time, our cars have never shown us a personality. But what if that last part changed? What if our cars, our IoT devices, and our ever-smarter homes were given personalities? For those who have played Halo on Xbox, what if we had an interface that digitally followed us and presented like Cortana, not on the PC, but in the game? You build the relationship with the AI and grow to trust it because it presents more like a person and less like the sad parodies of AIs we get in the current set of digital assistants.

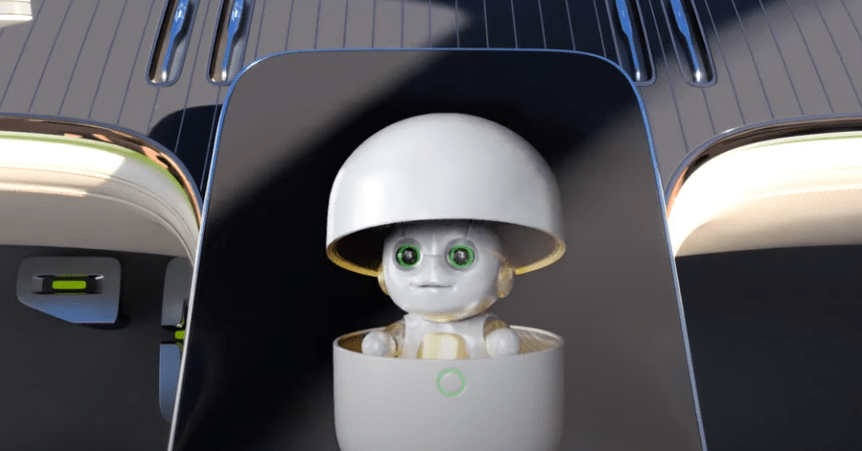

Take a look at this video of NVIDIA’s new AI. Granted, I’d prefer the Cortana interface (this is what Microsoft could have created) over what looks like an alien baby in an egg. Still, as this concept comes to future autonomous cars, smart homes, and personal technology, it could change how we interface with that technology.

Let’s talk about the next generation of human-machine interfaces as represented by NVIDIA at GTC this year.

The NVIDIA Omniverse Avatar

The issue with human-machine interfaces is that we often have to spend a lot of time learning how to work with them. This machine-centric approach isn’t the future we were promised in films ranging from Forbidden Planet to Star Wars. Those films consistently presented technology that would interface with us much as we interface with each other.

The talking cars we were promised were either dead relatives or articulate AIs that had our best interests at heart. What NVIDIA demonstrated could allow us to have the kind of relationship with our technology we’ve only seen in movies, and it could have any appearance we want and pretty much any voice we want as well. Jensen Huang even demonstrated an avatar version with his appearance and voice backed up by a conversational AI. This realistic, computerized avatar isn’t the future; it is possible today, though it hasn’t been fully implemented yet. It has expressions, it has hand movements, and it even has realistic clothing.

This capability could expand beyond our cars or homes. It could follow us to fast-food restaurants, carrying our preferences along, and the level of realism can be raised so that you no longer interface with something that looks artificial but with something that looks like an actual person. This technology is called NVIDIA Maxine, and it could change how we interface with even the medical equipment tied to our health. This video shows how it could change the way we interact with the systems around us.

Wrapping up

I think NVIDIA is on to something with NVIDIA Omniverse Avatar, NVIDIA Maxine, and its host of autonomous drive technologies for cars that drive and even fly. I don’t think many of us will ever trust a technology that doesn’t interface with us as we interface with others. But suppose we can give that technology a face, give it a personality, and ensure that it has our best interests at heart? In that case, most will come around to the idea that this technology can be trusted, can free up our time to enjoy more things, and can reduce our chances of being hurt or killed in an accident. At GTC, NVIDIA showed me our digital future, and now I can hardly wait to have a KITT, Cortana, or Maxine of my own. Maybe with my version, I can fly to work.
